ChatGPT's impact on the media landscape: navigating the future of AI and journalism

We interview ChatGPT about how it will impact the media

Updated: February 10, 2023:

Microsoft added ChatGPT integration into Bing. We added screenshots below of how results are generated and displayed.

Kristin Carpenter interviews Adam Ruggiero: Channel Mastery Podcast - Ep. 204: GearJunkie’s Adam Ruggiero on AI, bots, and more

We recently sat down with ChatGPT, an AI tool created by OpenAI, for a discussion on the impact of AI on the media landscape. We asked questions on how AI will change the media, affect consumers, and guide purchase decisions through search queries.

At Verde, we are always keeping up with the latest trends and technology to make sure our staff and clients are at the forefront of the consumer decision journey, from brand discovery to final touch points before purchase. Understanding the direction of technology allows us to be part of the evolving journalism and media landscape.

The current state of "search" is broken: anyone can quickly publish content, and Google struggles to display the best results for our questions. AI has the potential to analyze all the content available and provide more helpful answers, but those answers are still based on published content.

Important take-aways about this type of AI (neural network):

It cannot distinguish between fact and fiction, or between true and false

It cannot form an opinion; responses are (still) based on the information, disinformation, and misinformation that has been published

It is still a search engine, but at the next level. For example, current search engines take into account the one or two words preceding and following your search topic; ChatGPT takes in an additional three to four words and communicates the aggregated results using natural language processing.

Brands and media can use ChatGPT/Bing and Google/Bard to help brainstorm topics, trends, and proofread

Over the past few years, media consolidation has resulted in the closure of many magazines, leaving niche audiences without their preferred content. This is why we are seeing an increase in independent publications serving these specific audiences, publications that are highly relevant to both readers and brands in the industry. We anticipate that long-form, in-depth journalism will become a trusted source for AI, and we are ready for it.

Relationships between journalists, editors, publishers, and PR professionals are expected to deepen as media houses tap into AI to generate story ideas and revisit archived content. As reported in the Wall Street Journal, the Arena Group, for instance, aims to use AI to support content workflows, video creation, newsletters, sponsored content, and marketing campaigns, not to replace journalists.

As Ross Levinsohn, Arena Group’s CEO, stated, "It's not going to replace the art of creating content. It's giving the content creators, whether they're writers or social creators, real efficiency and real access to the archives we have."

While AI is great at creating and surfacing content, it lacks the ability to add a brand's voice and tone, so pay close attention to this if you use AI to create content. Getting it wrong could very easily hurt a brand or publisher.

For example, we ran a tweet from Wendy’s, known for its savage tweets, through ChatGPT:

Verde’s prompt to ChatGPT:

Write a funny response to this tweet: @wendys how much does a big mac cost?

ChatGPT’s response: "Well, a Big Mac costs about as much as keeping a small country afloat... but with way fewer toppings!" #WendysWittyReplies

You can see how the voice and tone are very different from Wendy’s normal snarky tone, and the response does not make a whole lot of sense.

We need to be hypervigilant, start looking around the corner, and ensure brands and products are being perceived and written about as intended. Helping sculpt and mold your brand's ethos and reputation will be harder, yet more important than ever.

Verde can help you build out a communications and perception strategy that focuses on your brand, products, consumers and brand reputation in this changing landscape…after all, if your brand isn't providing the necessary information, someone else will.

Now on to our interview!

How will ChatGPT affect journalism, writers, and the media?

ChatGPT can have the following effects on journalism, writers, and the media:

Content generation: ChatGPT can be used to generate articles, news summaries, and other types of content. This may reduce the need for human writers and reporters in certain circumstances.

Data analysis: ChatGPT can analyze large amounts of data and provide insights that journalists can use to inform their reporting.

Fact-checking: ChatGPT can be trained on large amounts of data to automatically fact-check information and help journalists verify the accuracy of information.

Personalization: ChatGPT can be used to generate personalized content based on user preferences, potentially increasing engagement with news and media content.

While ChatGPT may automate certain aspects of journalism and writing, it is also likely to create new opportunities for human journalists and writers who can leverage the technology to produce more in-depth, insightful content.

Our take:

We believe that the utilization of ChatGPT/AI will provide media outlets with increased capacity to produce articles, presenting opportunities for brand storytelling and product placement. However, this also necessitates a heightened focus on fact-checking by editors and writers.

Images provided by Rossignol.

How does ChatGPT create recommendations for skis?

To create recommendations for skis using ChatGPT, the following steps can be followed:

Data collection: Collect data on skis such as their specifications, user reviews, and ratings.

Model training: Train a ChatGPT model on the collected data. This can involve inputting a description of a user's skiing preferences, such as their level of experience, preferred ski terrain, and more, and having the model generate a recommendation for a ski that matches those preferences.

Recommendation generation: Use the trained model to generate recommendations for skis. This can be done by inputting a user's preferences into the model and having it generate a recommendation based on the data it has been trained on.

The recommendations generated by ChatGPT can be based on a combination of factors such as the user's preferences, skiing ability, and ski terrain. By using a trained language model, recommendations can be made more personalized and relevant to the individual user.
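To make the idea of matching a product to a skier's preferences more concrete, here is a minimal Python sketch. It is not how ChatGPT works internally: it swaps the trained language model for a simple hand-written scoring rule, and the `Ski` fields, the `recommend` function, and the product names are all hypothetical, made up purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Ski:
    name: str          # hypothetical product name
    ability: str       # "beginner", "intermediate", or "advanced"
    terrain: str       # "groomed", "powder", or "all-mountain"
    avg_rating: float  # average user rating on a 0-5 scale


def recommend(skis, ability, terrain):
    """Return the best-matching ski, or None if nothing matches at all."""
    def score(ski):
        # Count how many stated preferences the ski satisfies,
        # then break ties using the average user rating.
        matches = (ski.ability == ability) + (ski.terrain == terrain)
        return (matches, ski.avg_rating)

    best = max(skis, key=score, default=None)
    if best is None or score(best)[0] == 0:
        return None
    return best


# Illustrative catalog only; these are not real products or real ratings.
catalog = [
    Ski("Example Carver", "beginner", "groomed", 4.2),
    Ski("Example Floater", "advanced", "powder", 4.6),
    Ski("Example Quiver", "intermediate", "all-mountain", 4.4),
]

pick = recommend(catalog, ability="advanced", terrain="powder")
print(pick.name)  # Example Floater
```

The point of the sketch is that a recommendation can only be as good as the "who is this for" data attached to each product. If the ability and terrain fields are missing, there is nothing to match against.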

Our take:

This is a huge brand opportunity. While many outdoor brands are doing a much better job informing customers about the tech specs, very few excel at telling the story about who the product is for. Having this information on your site, media kits, and in stories will help provide information AI needs to create a recommendation.

Added February 10, 2023:

Now that Microsoft has started integrating ChatGPT into Bing search, we can see how it is pulling in information, sources, and specifics. Media placements are driving the responses here. We have also noticed that the capabilities of the Bing version are limited compared with OpenAI's ChatGPT.

How would ChatGPT determine the best GORE-TEX coat for someone conducting pre-purchase research?

To determine the best GORE-TEX coat for someone conducting pre-purchase research using ChatGPT, the following steps can be followed:

Data collection: Collect data on GORE-TEX coats such as their specifications, user reviews, and ratings.

Model training: Train a ChatGPT model on the collected data. This can involve inputting a description of a user's needs, such as the type of weather they will be wearing the coat in, the activities they will be doing, and more, and having the model generate a recommendation for a GORE-TEX coat that matches those needs.

Recommendation generation: Use the trained model to generate recommendations for Gore-Tex coats. This can be done by inputting a user's needs into the model and having it generate a recommendation based on the data it has been trained on.

The recommendations generated by ChatGPT can be based on a combination of factors such as the user's intended use, desired features, and budget. By using a trained language model, recommendations can be made more personalized and relevant to the individual user, helping them to make an informed purchasing decision.

Our take:

Again, ensuring your brand has good information about who your product is for will be the name of the game. This is an opportunity to make sure your customer service channels and the user experience on your website provide that information. We expect to see consumer decision engines become even more prevalent in the media and in Shopify apps, for example.

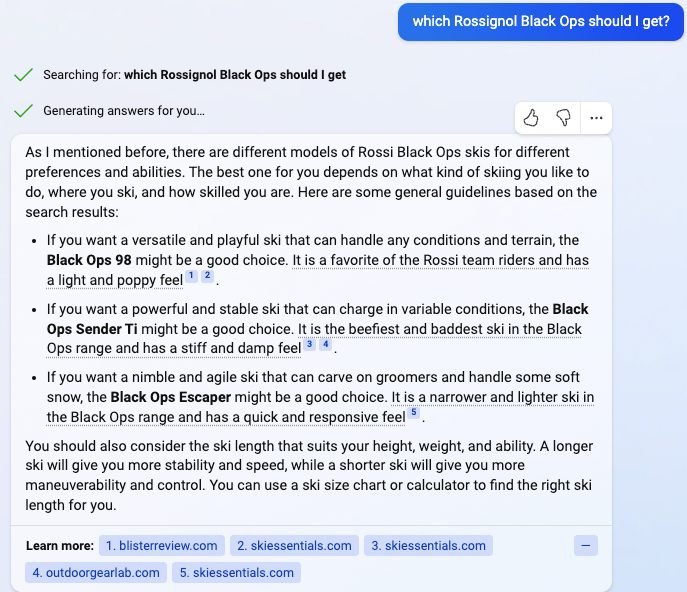

Bing ChatGPT shows us results and recommendations around a product with multiple versions for different purposes - we used the example of the Rossignol Black Ops ski line.

When the search query changes to a purchase decision, we see the results change to include shopping ads. Another interesting note: it changes ‘Rossi’ to ‘Rossignol’ in our test.

How much weight will user reviews and journalist reviews factor into recommendations that ChatGPT provides?

The weight given to user reviews and journalist reviews in recommendations generated by ChatGPT will depend on the specific training data and objectives of the model.

Model training: If the model has been trained on a large amount of user reviews and journalist reviews, then those reviews are likely to have a significant influence on the recommendations generated by the model.

Objectives: If the objective of the model is to prioritize user opinions, then user reviews are likely to carry more weight in the recommendations. Conversely, if the objective is to prioritize expert opinions, then journalist reviews are likely to carry more weight.

Bias: It's also important to note that the weight given to user reviews and journalist reviews can also be influenced by any biases in the training data. For example, if the model is trained on a skewed sample of user reviews, the recommendations generated may not accurately reflect the opinions of the general population.

Overall, the weight given to user reviews and journalist reviews in recommendations generated by ChatGPT will depend on the specific training data and objectives of the model, as well as the presence of any biases in the training data.
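As a rough illustration of what "weight" means here, the Python sketch below blends average user and journalist ratings using a single adjustable share. This is an assumption-laden toy, not a description of how ChatGPT or Bing actually score reviews: the `blended_score` function, the `expert_weight` parameter, and the sample ratings are all hypothetical.

```python
def blended_score(user_ratings, journalist_ratings, expert_weight=0.5):
    """Blend the average user rating with the average journalist rating.

    expert_weight is the share given to journalist reviews (0.0 to 1.0);
    the remainder goes to user reviews. Hypothetical helper for illustration.
    """
    if not 0.0 <= expert_weight <= 1.0:
        raise ValueError("expert_weight must be between 0 and 1")
    if not user_ratings or not journalist_ratings:
        raise ValueError("both rating lists must be non-empty")

    user_avg = sum(user_ratings) / len(user_ratings)
    journalist_avg = sum(journalist_ratings) / len(journalist_ratings)
    return expert_weight * journalist_avg + (1 - expert_weight) * user_avg


# Made-up ratings: users average 4.0, journalists average 3.0.
users = [5, 4, 4, 3]
journalists = [3, 3]

print(blended_score(users, journalists, expert_weight=0.25))  # 3.75
print(blended_score(users, journalists, expert_weight=0.75))  # 3.25
```

The same product scores differently depending on whose opinion is prioritized, which is why both consumer reviews and media reviews matter.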

Our take:

Having robust media reviews from trusted sources will be important, but so will consumer reviews. Brands should investigate how they can enhance and leverage user reviews on brand websites and media outlets. Encouraging thorough, in-depth customer reviews and media reviews will drive trust and confidence in your brand and products.

Does ChatGPT plagiarize?

No, ChatGPT does not plagiarize. It is a machine learning model trained on a vast corpus of text data and generates responses based on that training data. The responses are not taken directly from any one source, but are instead generated based on patterns in the training data.

Our take:

At this point, it may generate what it calls “original content,” but based on our tests, we have seen responses pull in copyrighted material - so we all need to be careful. This is going to open up an entirely new field of law. We saw it pull in trademarked taglines and other material that might get you in trouble.

How do you cite content generated from AI / ChatGPT?

To properly cite content generated by ChatGPT, it is important to follow standard citation practices for any source material used in the generation of the content.

Attribution: If the content generated by ChatGPT includes information from existing sources, those sources should be clearly attributed. This can be done through the use of in-text citations or footnotes.

Reference list: A reference list or bibliography should be included at the end of the content that lists all of the sources used, including ChatGPT, in accordance with the citation style being used (e.g. MLA, APA, Chicago, etc.).

Formatting: The format of the citation for content generated by ChatGPT will depend on the citation style being used. However, it should clearly indicate the source of the information, such as the name of the model (e.g. OpenAI's ChatGPT), the date the content was generated, and any relevant parameters or inputs used in the generation process.

In general, it is important to be transparent about the use of ChatGPT and to give proper credit to any sources used in the content generation process, including the technology itself.
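The elements named above (the model, the date the content was generated, and the input used) can be assembled into a single citation string. The short Python sketch below shows one way to do that. The layout is our own illustrative assumption and is not an official MLA, APA, or Chicago format; the `cite_ai_content` function is hypothetical, and the date is a placeholder.

```python
from datetime import date


def cite_ai_content(publisher, model, generated_on, prompt):
    """Build a simple citation string for AI-generated content.

    Hypothetical layout for illustration only; check your style guide
    for the format it actually requires.
    """
    return (
        f"{publisher}. ({generated_on.isoformat()}). {model} "
        f'[Large language model]. Prompt: "{prompt}"'
    )


citation = cite_ai_content(
    publisher="OpenAI",
    model="ChatGPT",
    generated_on=date(2023, 2, 10),  # placeholder date
    prompt="Does ChatGPT plagiarize?",
)
print(citation)
# OpenAI. (2023-02-10). ChatGPT [Large language model]. Prompt: "Does ChatGPT plagiarize?"
```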

Our take:

Update: We are now starting to see how Microsoft and OpenAI are addressing source citation in their latest release. Please take a look at the screenshots below for examples.

Currently, ChatGPT does NOT cite its sources. This is very concerning. We hope to see this corrected in new releases. However, we are still wary - even the developers and other people who work at OpenAI are not 100% sure how ChatGPT forms its answers. 😅

We are now seeing where ChatGPT in Bing is pulling in information, as it lists the websites at the bottom of the results. We are interested in how publishers will feel about having their content crawled and displayed, which essentially gives users less reason to visit the source website.

You can see how Microsoft is citing sources and when you click or hover over the citation, the sourced articles are displayed.

Is AI content immoral?

The morality of AI content is a complex and debated topic. Some people argue that AI-generated content, such as fake news, can be harmful and immoral because it misleads people and undermines trust in information sources. Others argue that AI-generated content is just a tool and that it is the responsibility of users to verify the information and use it ethically.

Ultimately, the morality of AI content depends on how it is used and the intent of those who use it. It is important for individuals and organizations to be aware of the potential risks and to use AI-generated content responsibly, in a manner that aligns with ethical and moral principles.

Our take:

It is only a matter of time before people figure out how to weaponize new technologies (and make money off them) and steal the work of writers, authors, artists, coders, and more; the need for regulation is very apparent.

In summary:

While this new technology is going to revolutionize the way people interact with the internet and each other, we see this as an opportunity to create compelling stories and to refocus our brands, products, and staff relentlessly on the customer.

Modeling is pointing towards an algorithm that acts like a ski shop or running shoe store employee. When a person walks into a store to buy a pair of shoes, skis, or a snowboard, the employee asks specific questions (where do you ski, what kind of terrain, what ability level, what’s your budget, and so on) to ensure they can recommend the best product for that person. Does your brand have that information on your website? Do media outlets have that information?

Providing the media with product specifications, and going beyond that by explaining the target audience and purpose of the product will resonate with both your customers and the media. These types of stories will win the ‘hearts and minds’ of the robot, too.

Example: 22/23 Rossignol Sender 106 Ti Plus by Blister

In addition, uplifting people and stories is something robots cannot replace (for now). An example of this is how Verde is Making News with Ambassadors. Creating experiences for consumers, brands, and media to come together can help facilitate those stories, as we do with Launch Dispatch and our media experiential events, which will continue to evolve to meet the needs of our clients and consumers.

Marketers and media need to be careful and thorough, and must continue to create original, trustworthy content that is highly relevant to a specific audience.

As Mark Schaefer said: may the most human company win.

Please contact us at Verde to tell your story.

A portion of the blog was generated by OpenAI’s ChatGPT.